Let’s get ethical!

Bachelor Thesis

436 Pages

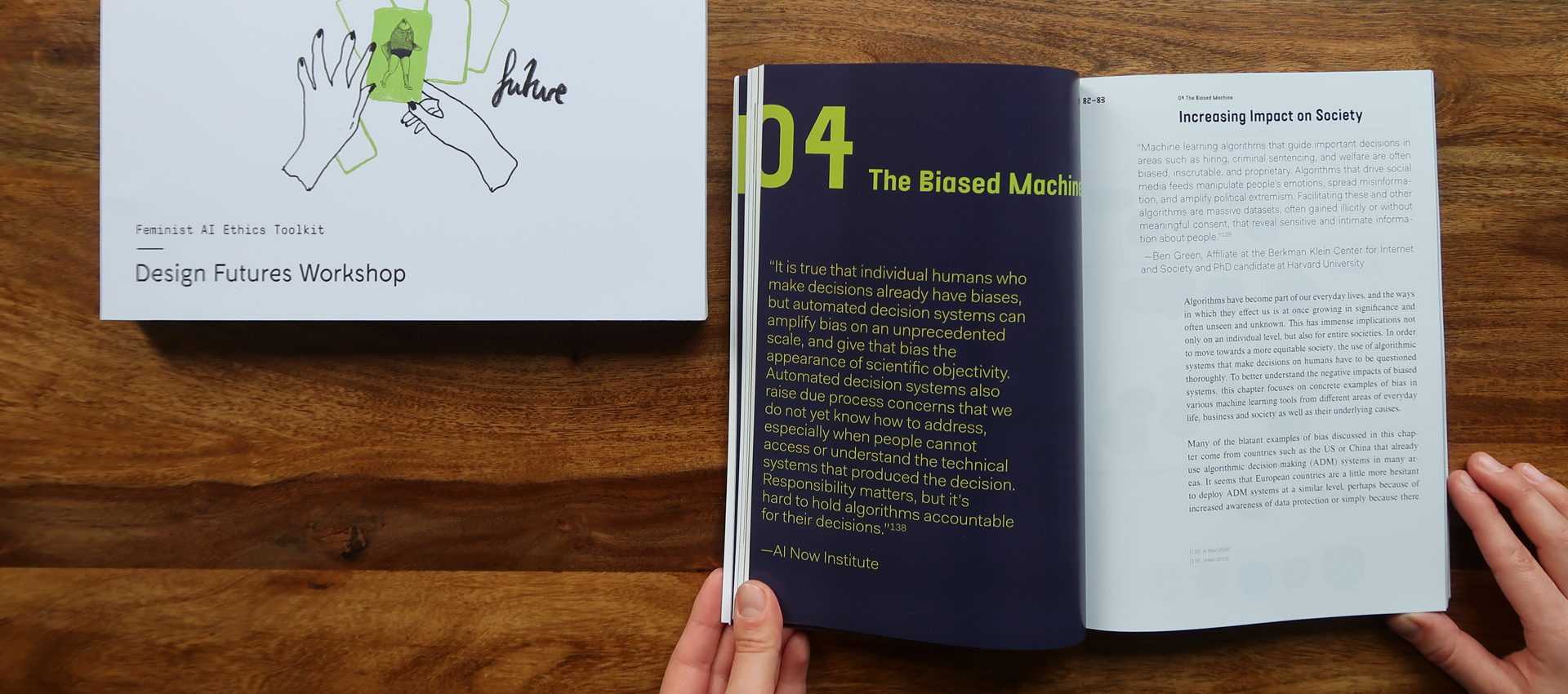

The rapid rise of algorithm-based processes and systems in various parts of society and our everyday life demands that we critically consider their social impacts at all levels and early on. Products and services driven by AI technology provide the opportunity to support and enhance human capabilities and to relieve us of annoying repetitive work. We created artificially intelligent machines to make fair and automated decisions about humans, only to find that their decisions perpetuate our societies’ structural discrimination, oppression and injustice.

If we want to use algorithm-based technologies, we must attempt to uncover latent social impacts early on, rather than after foundations have been laid. Many design decisions made by software engineers are very hard to undo. Once these decisions have reached a critical mass and other systems are built on top of them, they often remain integrated in future systems.

When looking at who is shaping the design process of algorithmic decision-making tools, one has to realize that it is rarely designers (those who professionally create products and services) or sociologists (those who do research on social justice and oppression), and hardly ever the people who are marginalized in today's society.

Computer scientists, who often look only at the technical implications of this complex problem, cannot be left alone with the immense responsibility of answering profound ethical questions, especially while many of them do not yet have sufficient training in, or awareness of, the structural dimensions of the problem.

It is tempting to approach biases in machine learning with technology. However, explainable AI, AI ethics principles and bias checklists can still lead to unethical AI that strengthens injustice.

To properly address these matters it is first of all necessary that data scientists recognize their work as political action, with respect to collective social processes influencing rights, status and resources across society. (Green, Ben. 2018. Data Science as Political Action – Grounding Data Science in a Politics of Justice)

Our technologies are not good or bad per se. Many of them pose a dual-use dilemma: the same research or technology can both benefit and harm. The question then is what priority we give to the benefits and to the harms, and who has the power to decide who benefits and who is harmed.

We now urgently need to draw on social justice knowledge. What we need are intersectional feminist perspectives on AI.

What are intersectional feminist perspectives? What does gender have to do with it? Feminism isn't just about gender. Feminism is, to quote Reni Eddo-Lodge, "a movement that works to liberate all people who have been economically, socially, and culturally marginalized by an ideological system that has been designed for them to fail." (Eddo-Lodge, Reni. 2017. Why I’m No Longer Talking to White People About Race)

In Feminist AI Ethics, we ask not only whether an automated tool discriminates against people in a certain group, but also whether the tool promotes (or at least doesn't go against) social justice and equity.

“All that you touch, you change. All that you change, changes you.” — Octavia E. Butler